
CVPR 2010, IEEE

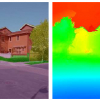

Single Image Depth Estimation From Predicted Semantic Labels

We consider the problem of estimating the depth of each pixel in a scene from a single monocular image. Unlike traditional approaches [18, 19], which attempt to map directly from appearance features to depth, we first perform a semantic segmentation of the scene and then use the semantic labels to guide the 3D reconstruction. This approach has several advantages. By knowing the semantic class of a pixel or region, depth and geometry constraints can be easily enforced (e.g., "sky" is far away and "ground" is horizontal). In addition, depth can be more readily predicted by measuring the difference in appearance with respect to a given semantic class. For example, a tree has a more uniform appearance in the distance than it does close up. Finally, incorporating semantic features allows us to achieve state-of-the-art results with a significantly simpler model than previous work.
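To make the two example constraints ("sky" is far away, "ground" is horizontal) concrete, here is a minimal sketch of how semantic labels alone could fix depth for those classes. It is not the authors' model, which also predicts depth from appearance features. It assumes a pinhole camera with zero pitch and roll, a known focal length in pixels `f`, camera height `h` above a flat ground plane, and a known horizon row `v0`. Under those assumptions a ground point at distance `Z` projects to row `v = v0 + f*h/Z`, so `Z = f*h/(v - v0)`. The label ids, the function name `depth_from_labels`, the maximum depth, and the constant depth for other classes are all hypothetical choices for illustration.

```python
from typing import List

# Hypothetical label ids for this sketch; not the paper's label set.
SKY, GROUND, OTHER = 0, 1, 2


def depth_from_labels(
    labels: List[List[int]],
    focal_px: float,
    cam_height_m: float,
    horizon_row: float,
    max_depth_m: float = 80.0,
    other_depth_m: float = 10.0,
) -> List[List[float]]:
    """Assign a depth (metres) to each pixel using only its semantic label.

    Assumes a pinhole camera with zero pitch and roll, so a flat ground plane
    cam_height_m below the camera projects to row v = horizon_row + f*h/Z.
    Row index v counts down from the top of the image.
    """
    depth: List[List[float]] = []
    for v, row in enumerate(labels):
        out_row: List[float] = []
        for label in row:
            if label == SKY:
                # Constraint: sky is far away, so use the maximum depth.
                d = max_depth_m
            elif label == GROUND and v > horizon_row:
                # Constraint: ground is horizontal, so invert v - v0 = f*h/Z,
                # clamping to the maximum depth near the horizon.
                d = min(focal_px * cam_height_m / (v - horizon_row), max_depth_m)
            elif label == GROUND:
                # Ground at or above the horizon row is clamped to the maximum depth.
                d = max_depth_m
            else:
                # Placeholder: a real system would regress depth from
                # class-conditioned appearance features here.
                d = other_depth_m
            out_row.append(d)
        depth.append(out_row)
    return depth


labels = [
    [SKY, SKY],
    [OTHER, GROUND],
    [GROUND, GROUND],
    [GROUND, OTHER],
]
print(depth_from_labels(labels, focal_px=10.0, cam_height_m=1.5, horizon_row=0.5))
# [[80.0, 80.0], [10.0, 30.0], [10.0, 10.0], [6.0, 10.0]]
```

Ground depth falls off as the row moves further below the horizon, which is the geometric constraint the label makes available; the `OTHER` branch is where a class-specific appearance model would take over.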


| Field | Value |
|---|---|
| Added | 01 Apr 2010 |
| Updated | 14 May 2010 |
| Type | Conference |
| Year | 2010 |
| Where | CVPR |
| Authors | Beyang Liu, Stephen Gould, Daphne Koller |
